
Create a Robots.txt File Step by Step + Free Template to Download

Want to take control of how search engines see your website? Learning how to create a robots.txt file is one of the smartest first steps in mastering search engine optimization. This tiny file holds major power: it can guide crawlers, support your SEO strategy, and keep bots away from parts of your site that don’t need to be crawled.

In this post, you’ll learn exactly what a robots.txt file is, how it works, why it matters, how to create and test one, and how to use it properly to improve your SEO performance. Whether you’re a beginner or an experienced SEO specialist, this guide is packed with practical insight from my hands-on experience working on dozens of websites.

What Is a Robots.txt File?

The robots.txt file is a plain text document that tells web crawlers (also called search engine bots) which parts of your site they may or may not crawl. It lives in the root directory of your website (the public_html folder on most cPanel hosts) and acts as a set of instructions for search engines. Reputable crawlers follow these instructions voluntarily; the file itself doesn’t lock anything away.

To put it simply: robots.txt is a gatekeeper for your website’s content.

Why Is Robots.txt Important for SEO?

Used properly, a robots.txt file can:

- Prevent search engines from crawling low-value or duplicate pages

- Optimize crawl budget, ensuring bots spend time on your most important content

- Keep crawlers out of admin, login, and other utility directories

- Help improve overall site visibility in search results

In short, a well-configured robots.txt file gives you more control over what gets crawled and how bots navigate your site.

How Search Engine Crawlers Work

To understand why this file matters, let’s briefly look at robots.txt rules from the bot’s perspective.

Search engine bots like Googlebot, Bingbot, and DuckDuckBot crawl your website to collect information and store it in their index. When a bot lands on your site, the first file it looks for is robots.txt.

Here are some popular bots you might want to manage with this file:

- Googlebot – Google’s main web crawler

- Bingbot – Used by Bing

- YandexBot – The crawler of Yandex, the leading search engine in Russia

- Baiduspider – The crawler of Baidu, China’s leading search engine

- facebookexternalhit – Fetches pages to build Facebook link previews

Using a well-structured robots.txt template, you can control their behavior to your advantage.
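To see how a bot picks its rules, here is a minimal Python sketch using the standard library’s urllib.robotparser. The robots.txt content and URLs are hypothetical placeholders. This parser is simpler than Googlebot’s (it ignores * and $ wildcards in paths and applies the first matching rule rather than the most specific one), so treat it as an illustration of User-agent groups only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one group for Googlebot, one for every other bot.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /search/

User-agent: *
Disallow: /search/
Disallow: /private/
"""

def can_crawl(user_agent: str, url: str) -> bool:
    """Return True if the given bot may fetch the URL under ROBOTS_TXT."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())  # parse the text directly instead of downloading it
    return parser.can_fetch(user_agent, url)

for bot in ("Googlebot", "Bingbot"):
    for url in ("https://yourdomain.com/search/q", "https://yourdomain.com/private/page"):
        print(bot, url, can_crawl(bot, url))
```

Here Googlebot may fetch /private/page because it only obeys its own group, while Bingbot has no group of its own, falls back to the * group, and is blocked.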

💡 Pro Tip: If you haven’t connected your website to Google Search Console yet, now’s the time to do it. Search Console shows how your robots.txt file affects crawling and indexing: the Page indexing report lists URLs that are “Blocked by robots.txt”, which makes technical SEO issues easier to identify.

Check out my step-by-step visual guide here:

👉 Connecting Google Search Console to Your Website: 2 Visual Methods

Robots.txt Format & Syntax

To create a robots.txt file, you need to know which directives go into it. Here’s a simple breakdown of how a robots.txt file is structured:

```
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-login.php
Allow: /wp-admin/admin-ajax.php

Sitemap: https://yourdomain.com/sitemap_index.xml
```

Explanation:

- User-agent: Specifies the crawler you’re targeting. Use * to apply rules to all bots.

- Disallow: Blocks access to specific URLs or directories.

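- Allow: Grants access to specific files or paths inside a disallowed folder. For Google and other crawlers that follow RFC 9309, the most specific (longest) matching rule wins, which is why Allow: /wp-admin/admin-ajax.php takes precedence over Disallow: /wp-admin/.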

- Sitemap: Points crawlers to your XML sitemap so they can discover your pages more easily. Use the full URL, including https://.

Not sure how to set up a sitemap for your website? Don’t worry—I’ve written a step-by-step guide that walks you through the process.

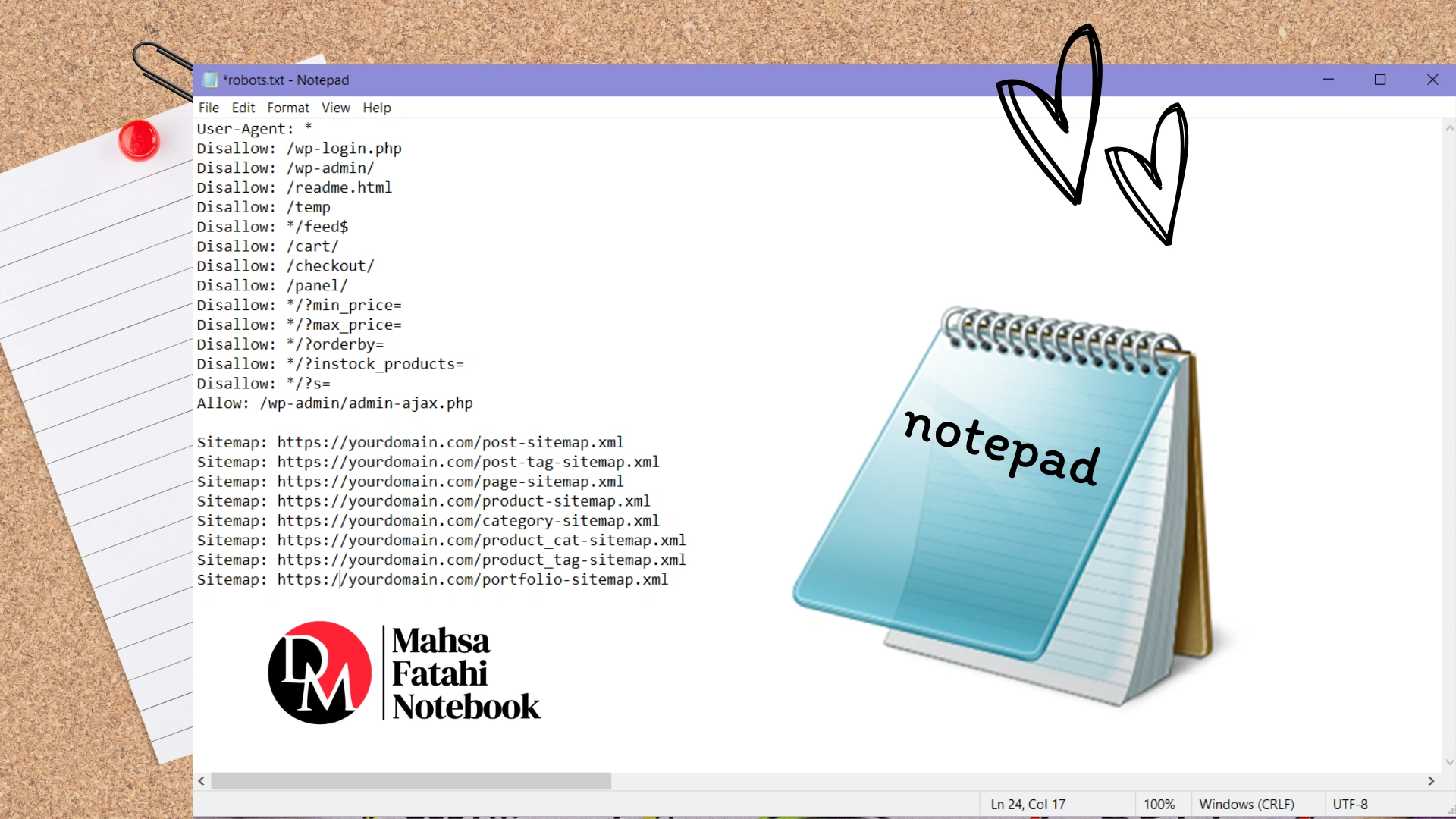
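Why does the Allow line win for admin-ajax.php when its whole folder is disallowed? Google, and RFC 9309 which standardizes the protocol, pick the matching rule with the longest path, and Allow wins a tie. The minimal Python sketch below reproduces only that precedence logic for the first sample in this post. It is a simplified illustration with hypothetical function names: it uses plain prefix matching, assumes the file has a single User-agent: * group, and ignores * and $ wildcards.

```python
def parse_rules(robots_txt: str) -> list:
    """Collect ("allow" | "disallow", path) pairs, assuming one User-agent: * group."""
    rules = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() in ("allow", "disallow"):
            rules.append((field.strip().lower(), value.strip()))
    return rules

def is_allowed(rules: list, path: str) -> bool:
    """Longest matching rule wins; on a tie, Allow wins. No matching rule means allowed."""
    best_length, allowed = -1, True
    for directive, rule_path in rules:
        if rule_path and path.startswith(rule_path):  # plain prefix match; empty value matches nothing
            is_allow = directive == "allow"
            if len(rule_path) > best_length or (len(rule_path) == best_length and is_allow):
                best_length, allowed = len(rule_path), is_allow
    return allowed

SAMPLE = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-login.php
Allow: /wp-admin/admin-ajax.php
Sitemap: https://yourdomain.com/sitemap_index.xml
"""

rules = parse_rules(SAMPLE)
for path in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/blog/hello-world/"):
    print(path, is_allowed(rules, path))
```

For comparison, a first-match parser such as Python’s urllib.robotparser stops at the earlier Disallow: /wp-admin/ line and reports admin-ajax.php as blocked, which is one reason to test your file with tools that follow Google’s rules.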

Create Robots.txt from a Sample Configuration

Here’s a more detailed robots.txt configuration for WordPress or WooCommerce sites:

```
User-Agent: *
Disallow: /wp-login.php
Disallow: /wp-admin/
Disallow: /readme.html
Disallow: /temp
Disallow: */feed$
Allow: /wp-admin/admin-ajax.php
# Additional restrictions for specific pages and query parameters
Disallow: /cart/
Disallow: /checkout/
Disallow: /panel/
Disallow: */?min_price=
Disallow: */?max_price=
Disallow: */?orderby=
Disallow: */?instock_products=
Disallow: */?s=

# Sitemap declaration
Sitemap: https://yourdomain.com/sitemap_index.xml
```
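The sample above relies on two special characters defined in RFC 9309 and supported by Google: * matches any sequence of characters, and a trailing $ marks the end of the URL. Each rule is compared with the URL path plus query string, starting from the beginning. Here is a minimal Python sketch of that matching, assuming Google-style semantics; the function names are hypothetical.

```python
import re

def robots_pattern_to_regex(pattern: str) -> "re.Pattern":
    """Translate a robots.txt path pattern into an equivalent regular expression."""
    anchored = pattern.endswith("$")  # a trailing '$' means "the URL must end here"
    if anchored:
        pattern = pattern[:-1]
    # '*' matches any run of characters (including none); everything else is literal.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))

def rule_matches(pattern: str, path_and_query: str) -> bool:
    # re.match anchors at the start, just as robots.txt rules match from the start of the path.
    return robots_pattern_to_regex(pattern).match(path_and_query) is not None

examples = [
    ("*/feed$", "/category/news/feed"),       # ends with /feed: matched
    ("*/feed$", "/category/news/feed/"),      # trailing slash: not matched
    ("*/?orderby=", "/shop/?orderby=price"),  # sorted product listing: matched
    ("*/?orderby=", "/shop/"),                # plain listing: not matched
    ("/temp", "/template/"),                  # prefix match: /temp also covers /template/
]
for pattern, url in examples:
    print(f"{pattern!r:16} {url!r:28} {rule_matches(pattern, url)}")
```

The last example shows why prefix rules deserve care: Disallow: /temp also blocks any path that starts with /temp, such as /template/. Writing the rule as /temp/ limits it to URLs inside that folder.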

Instead of pointing to a single sitemap index, you can also list individual sitemaps for different post types. Here are common sitemap URLs on WordPress sites; the exact file names depend on the SEO plugin that generates them:

```
Sitemap: https://yourdomain.com/post-sitemap.xml
Sitemap: https://yourdomain.com/post-tag-sitemap.xml
Sitemap: https://yourdomain.com/page-sitemap.xml
Sitemap: https://yourdomain.com/product-sitemap.xml
Sitemap: https://yourdomain.com/category-sitemap.xml
Sitemap: https://yourdomain.com/product_cat-sitemap.xml
Sitemap: https://yourdomain.com/product_tag-sitemap.xml
Sitemap: https://yourdomain.com/portfolio-sitemap.xml
```

Note: The Sitemap directive isn’t tied to any User-agent group, so crawlers read it wherever it appears, but placing your sitemap URLs at the end of the file keeps it tidy. The list above names a separate sitemap for each post type, such as posts, pages, products, and tags. Why does this matter? Your main sitemap may also include post types that were created during the site design process. By listing only the sitemaps you care about, you make sure the content you advertise to crawlers is your most important content. Keep in mind that this doesn’t stop bots from crawling other URLs; use Disallow rules for that.
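To check which sitemap URLs a parser actually picks up, you can use the site_maps() method of Python’s urllib.robotparser (available since Python 3.8). This is a minimal sketch; the file content reuses placeholder URLs from the list above and deliberately puts one Sitemap line between rules to show that it is still collected.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Sitemap: https://yourdomain.com/post-sitemap.xml
Disallow: /checkout/
Sitemap: https://yourdomain.com/page-sitemap.xml
Sitemap: https://yourdomain.com/product-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns every Sitemap URL in file order, or None if there are none.
for url in parser.site_maps() or []:
    print(url)
```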

How to Create a Robots.txt File

Here’s how to make a robots.txt file step by step:

- Open any text editor like Notepad on your PC

- Add your custom rules using the structure shown above

- Save the file as plain text named robots.txt (all lowercase, UTF-8 encoded)
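If you manage several sites, you may prefer to generate the file from a script instead of typing it by hand. This is a minimal Python sketch, not a plugin feature; the function names and example paths are hypothetical. It produces a single User-agent group followed by the Sitemap lines and writes the result as UTF-8 plain text.

```python
def build_robots_txt(disallow, allow=(), sitemaps=(), user_agent="*"):
    """Assemble a single-group robots.txt file as a string."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    if sitemaps:
        lines.append("")  # blank line before the file-wide Sitemap entries
        lines += [f"Sitemap: {url}" for url in sitemaps]
    return "\n".join(lines) + "\n"

def save_robots_txt(content, filename="robots.txt"):
    """Write the file as UTF-8 plain text; the name must be exactly 'robots.txt'."""
    with open(filename, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(content)

if __name__ == "__main__":
    content = build_robots_txt(
        disallow=["/wp-admin/", "/wp-login.php"],
        allow=["/wp-admin/admin-ajax.php"],
        sitemaps=["https://yourdomain.com/sitemap_index.xml"],
    )
    print(content)
    # save_robots_txt(content)  # uncomment to write robots.txt in the current folder
```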

You can also use the robots.txt editors built into SEO plugins such as Yoast SEO or Rank Math to create or modify the file directly from the WordPress dashboard.

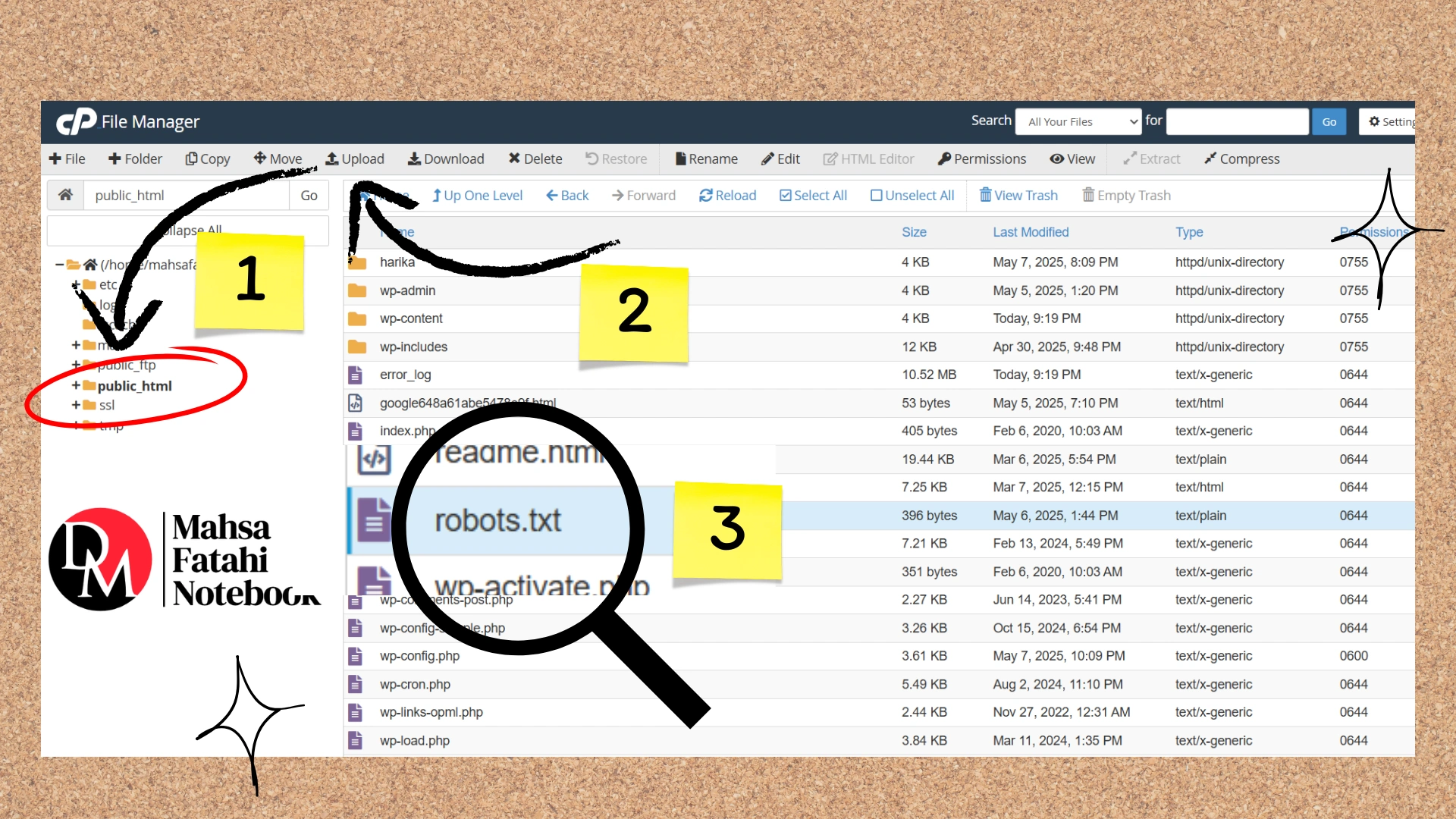

How to Add Robots.txt to Your Website

Once you’ve created your robots.txt file, upload it to your site:

- Go to your hosting control panel (like cPanel).

- Navigate to the public_html directory (the root folder of your domain).

- Upload the robots.txt file there.

Then verify the upload by opening your robots.txt URL in a browser:

https://yourdomain.com/robots.txt
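You can also run the same check from a script. This minimal Python sketch uses only the standard library, and the function names are hypothetical. robots_url() shows why the file must sit in the root folder: crawlers build the robots.txt address from the scheme and host alone, ignoring the rest of the page URL.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen

def robots_url(page_url: str) -> str:
    """Return the only robots.txt location crawlers check for this URL's host."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

def check_robots_txt(site_url: str) -> None:
    """Fetch robots.txt and print its status code, content type and first lines."""
    url = robots_url(site_url)
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses such as 404.
    with urlopen(url, timeout=10) as response:
        print(url, response.status, response.headers.get("Content-Type"))
        text = response.read().decode("utf-8", errors="replace")
        print("\n".join(text.splitlines()[:10]))
```

Calling check_robots_txt("https://yourdomain.com/blog/") requests https://yourdomain.com/robots.txt; a 200 status and your own rules in the output mean the upload worked. Because the address depends on the host, a subdomain such as shop.yourdomain.com needs its own robots.txt file.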

How to Create Robots.txt: Best Practices to Follow

Here are some essential robots.txt guidelines to follow:

- Always include your sitemap URL for better crawling.

- Avoid blocking important assets (like JS or CSS) unless absolutely necessary.

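- Never rely on robots.txt to hide private data: the file is publicly readable and only well-behaved bots obey it. Use proper authentication instead.

- Test your rules before and after publishing. Google Search Console’s robots.txt report, which replaced the older “robots.txt Tester” tool, shows the robots.txt files Google found for your site and any errors or warnings.

- Keep your syntax clean and easy to read: crawlers parse the file line by line and skip lines they can’t understand, so a typo can silently disable a rule.

- Use bot-specific User-agent groups only when needed. A crawler follows only the single most specific group that matches it, so a Googlebot group replaces your User-agent: * rules for Googlebot instead of adding to them.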
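Before uploading, a quick syntax check can catch typos that crawlers would otherwise skip without warning. The following Python sketch is a hypothetical mini-linter, not a replacement for Google’s own tools. It only checks that each line is a field: value pair using one of the four fields Google documents (user-agent, allow, disallow, sitemap), that rules come after a User-agent line, that paths start with / (or a * wildcard), and that Sitemap values are absolute URLs.

```python
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}

def lint_robots_txt(text: str) -> list:
    """Return human-readable warnings for common robots.txt syntax problems."""
    problems = []
    seen_user_agent = False
    for number, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.split("#", 1)[0].strip()  # ignore comments and whitespace
        if not line:
            continue  # blank or comment-only line
        field, sep, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if not sep:
            problems.append(f"line {number}: missing ':' separator")
        elif field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unrecognized field '{field}' (may be ignored)")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {number}: {field} rule before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/'")
        elif not value.startswith(("http://", "https://")):  # only "sitemap" is left here
            problems.append(f"line {number}: sitemap must be an absolute URL")
    return problems

print(lint_robots_txt("Disallow: /cart/\nUser-agent: *\nDisalow: /temp\nSitemap: /sitemap.xml\n"))
```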

Free Download: Ready-Made Robots.txt File for Your Website

Want a ready-to-use file? Here’s a robots.txt template you can download and customize for your CMS or website’s needs.

Common Mistakes When You Create Robots.txt

Avoid these common pitfalls:

- Blocking your entire website with Disallow: / under User-agent: *

- Forgetting to update the sitemap URL

- Blocking critical resources like /wp-content/uploads/

- Mixing up uppercase and lowercase in paths: rule paths are case-sensitive, so Disallow: /Temp does not block /temp
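You can reproduce two of these mistakes with Python’s urllib.robotparser. The rules here are simple prefixes, so this standard-library parser evaluates them the same way Googlebot would; the URLs and the helper name are placeholders.

```python
from urllib.robotparser import RobotFileParser

def allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Parse robots.txt text and report whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# Blocking everything: "Disallow: /" under "User-agent: *" matches every path.
block_all = "User-agent: *\nDisallow: /\n"
print(allowed(block_all, "https://yourdomain.com/"))               # False
print(allowed(block_all, "https://yourdomain.com/blog/my-post/"))  # False

# Case sensitivity: "/Temp" does not cover "/temp".
wrong_case = "User-agent: *\nDisallow: /Temp\n"
print(allowed(wrong_case, "https://yourdomain.com/Temp/file.html"))  # False
print(allowed(wrong_case, "https://yourdomain.com/temp/file.html"))  # True
```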

The robots.txt file might be small, but its impact on Digital Marketing and SEO is huge. Whether you want to optimize your crawl budget, keep crawlers away from irrelevant pages, or manage bots more effectively, learning to build a robots.txt file is a must for every site owner.

If you’re unsure how to configure robots.txt for your site’s SEO, or you need someone to audit your entire SEO structure, I can help.

I’m an experienced SEO specialist offering freelance services to businesses and individuals. If you’re looking to hire an SEO specialist who understands both strategy and technical SEO, feel free to reach out through the contact page.